Monitor your web site uptime and response time from multiple geographical locations

We have established a global monitoring network by partnering with leading hosting providers. Our network currently includes servers in 50+ different geographical locations. We will be adding more monitoring locations as we go along.

Below is a continent-wise listing of our monitoring locations along with their IP addresses.

We monitor your servers, applications, Exchange, Active Directory, helpdesk and environment, all the way down to your machines! Contact us: 021-29622097/98, mobile: 08121057533

Tuesday, May 27, 2014

Site24x7 monitors your website from these IP addresses.

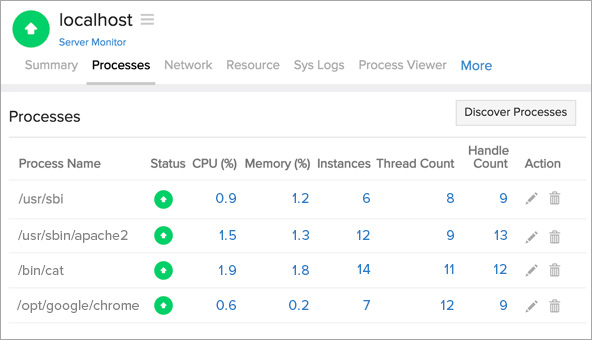

Site24x7 for internal server monitoring

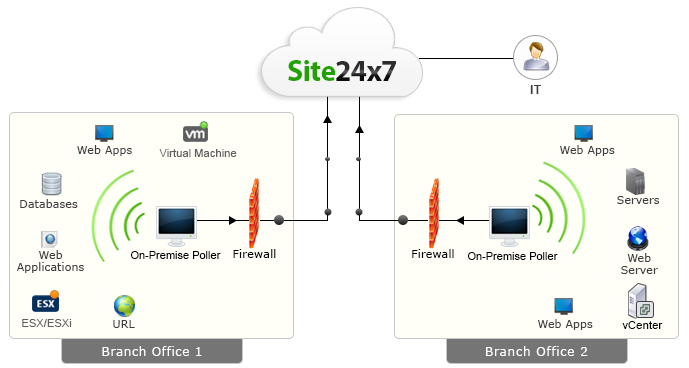

Site24x7's On-Premise Poller

Today's workforce uses both internet and intranet applications to get work done. The Site24x7 On-Premise Poller complements Site24x7's global monitoring network of servers by allowing you to install a lightweight, stateless, auto-updating software component in your internal network or private cloud and monitor your mission-critical resources along with your internet-facing websites.

What can it do?

The Site24x7 On-Premise Poller can ping network devices and monitor servers, application servers, database servers, intranet portals, ERP systems and payroll applications, ensuring that these and other custom applications are up and performing optimally. In short, it can help you monitor resources behind the firewall.

This is made possible by its support for network protocols and services such as HTTPS, SMTP, POP, IMAP, DNS, Port and Ping. Its flexible architecture can also help you monitor the end-user experience of your web applications as experienced by employees at your branch offices. This capability complements Site24x7's agent-based server monitoring and public cloud monitoring.
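
To make the Port and HTTPS checks concrete, here is a minimal sketch of what an internal poller might run against hosts behind the firewall. It is not Site24x7's implementation; the function names, the result fields and the timeouts are assumptions chosen for illustration.

```python
import socket
import time
import urllib.error
import urllib.request


def check_port(host: str, port: int, timeout: float = 5.0) -> dict:
    """'Port' check: can a TCP connection be opened, and how long did it take?"""
    start = time.monotonic()
    try:
        with socket.create_connection((host, port), timeout=timeout):
            up = True
    except OSError:  # refused, unreachable, or timed out
        up = False
    elapsed_ms = (time.monotonic() - start) * 1000
    return {"type": "port", "target": f"{host}:{port}", "up": up,
            "response_ms": round(elapsed_ms, 1)}


def check_http(url: str, timeout: float = 10.0) -> dict:
    """HTTP(S) check: 'up' if the server answers with a 2xx or 3xx status."""
    start = time.monotonic()
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            status = resp.status  # urlopen follows redirects, so this is the final status
    except urllib.error.HTTPError as err:
        status = err.code  # the server answered, but with a 4xx/5xx status
    except OSError:  # URLError, DNS failure, refused connection, timeout
        status = None
    elapsed_ms = (time.monotonic() - start) * 1000
    up = status is not None and 200 <= status < 400
    return {"type": "http", "target": url, "up": up, "status": status,
            "response_ms": round(elapsed_ms, 1)}
```

A poller would run checks like these on a schedule, for example `check_port("db01.intranet.local", 5432)` or `check_http("https://erp.intranet.local/login")` (both hostnames hypothetical), and collect the resulting dictionaries for reporting.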

How does it work?

The On-Premise Poller is a lightweight, stateless, auto-updating software component that you download and install in your internal network.

This software then monitors your internal resources and pushes performance and uptime information to Site24x7 using a cloud-friendly architecture (one-way HTTPS). No holes in the firewall!

It can also be installed in various branch offices and used to monitor the user experience from each location. Since every installed poller reports to the central Site24x7 servers, you can view consolidated dashboards and reports.
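
The "one-way HTTPS" idea is that the poller only ever opens outbound connections to the cloud service and never listens for inbound ones, so no firewall rule has to be opened. The sketch below shows that pattern under stated assumptions: the collector URL, the `X-Poller-Key` header and the payload fields are hypothetical and are not Site24x7's actual API.

```python
import json
import urllib.request

# Hypothetical collector endpoint and key; the real service's API is not described in this post.
COLLECTOR_URL = "https://collector.example.com/api/poller-results"
POLLER_KEY = "hypothetical-poller-key"


def build_report(poller_id: str, results: list[dict]) -> bytes:
    """Serialize a batch of check results into a JSON request body."""
    body = {
        "poller_id": poller_id,      # identifies the branch office or network
        "result_count": len(results),
        "results": results,
    }
    return json.dumps(body).encode("utf-8")


def push_report(payload: bytes, timeout: float = 15.0) -> int:
    """POST the batch outbound over HTTPS and return the HTTP status.

    The poller never listens on a port, so only outbound HTTPS
    has to be allowed through the firewall.
    """
    req = urllib.request.Request(
        COLLECTOR_URL,
        data=payload,
        method="POST",
        headers={"Content-Type": "application/json", "X-Poller-Key": POLLER_KEY},
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.status
```

Because every poller tags its batch with its own ID, the central service can merge results from many branch offices into one dashboard.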

Why should I try it?

How do I get started?

Monitoring servers from the cloud via Site24x7

Monday, May 26, 2014

Monitor Anything with PRTG

Monitoring of Things: Exploring a New World of Data

We love technology. It's what drives our developers. It's what drives our product. It's what drives our marketing. We try to avoid jumping on the bandwagon of every new IT buzzword that's out there—at least if it isn't directly related to a possible application for our customers. That's why we've carefully kept an eye on the Internet of Things (IoT) trend and tried to determine if it's a topic that might offer substantial benefits for our customers in the years to come. We don't know yet if IoT will live up to the hype, but we are convinced that it will have an impact on the way we experience IT—and the time to get prepared is now.

The Internet of Things basically means everything is connected, with all the advantages and disadvantages that brings. For the network administrator of the future, this rising complexity will come with a whole new set of challenges. Forget about BYOD (Bring Your Own Device) and start thinking about BYOT (Bring Your Own Thing), where a "thing" could be, if you follow recent IT media articles, anything from a coffee machine to a car parked in the company's underground garage. Wearables (electronic devices you wear on your body) in particular seem to be one of the first trends within this almost infinite range of applications.

Who Monitors the Monitor?

Wearables are often intended for extended healthcare purposes, for example monitoring a patient's pulse or heart rate. If there's a sudden drop in the heart rate, an ambulance could automatically be informed, find the patient via GPS signal and hopefully save his life. But what happens if the software crashes, the device gets disconnected or it is simply turned off? The recipient of the body monitoring data also has to be informed about the status of the wearable itself. You have to monitor the device in order to guarantee a constant flow of reliable body monitoring data. For hospitals equipping their patients with these kinds of wearables, integrating them into the IT environment is only the first step. They also have to think about updating their network monitoring strategy. Remember, everything will be connected.

One of the biggest challenges will be to integrate a very heterogeneous group of devices into an already existing network structure. This is even truer for companies in industries with a huge scope of possible "things" to integrate into their network.

The Intelligent Industrial Network: Industry 4.0

In the manufacturing industry, too, wearables that, for example, measure factory workers' noise exposure could be a realistic scenario, but that's just the beginning. Imagine assembly lines that are never affected by unplanned downtime, maintenance work that can be scheduled for an exact point in time, and spare parts that arrive before a replacement is even necessary. No more warning lights that only flash after an error has occurred or after a part has exceeded its life cycle.

This development is known under different terms like Smart Manufacturing or Industrial Internet. In Germany it is called Industry 4.0, which refers to the fourth industrial revolution and was initiated as a project in the German government's high-tech strategy. The goal is to create intelligent networks along the entire value chain that can control each other autonomously. Although we may still be at the beginning of this revolution, it's important to start planning for the future now. In particular, sensible integration with the existing IT infrastructure should not be taken lightly. What happens to the data that gets picked up from the machines? It has to be fed into the central IT system to enable further processing and useful display, and to give maintenance workers a basis for taking action. Monitoring things, in this case complex industrial machines, isn't so different from monitoring network devices: what matters is getting relevant data that can be analyzed and put to a purpose.

The Evolution of Monitoring

As with the different stages of the industrial revolution, IT is an area that never stops evolving, and network monitoring is a big part of this development. When the concept was first introduced, the technology was mostly used to monitor physical IT devices such as routers or switches (Monitoring 1.0). With the ongoing virtualization of networks, new concepts and functionalities had to be found (Monitoring 2.0) in order to gather and process new kinds of relevant data. The next logical step was to run applications in the cloud and extend virtualization even further. To give users of SaaS (Software as a Service) solutions and other cloud applications constant access to their production environment, the connection to the cloud has to be closely monitored (Monitoring 3.0). This includes monitoring services and resources from every perspective to guarantee the smooth operation of all systems and connections within the cloud.

Besides the need to keep monitoring all the devices, virtual machines and cloud-based applications that have been monitored in the past, the Internet of Things also launches a new era in network monitoring (Monitoring 4.0): with every new thing connected to the network, the amount of data that can and should be monitored keeps growing. Due to the heterogeneous nature of "things" and applications, many of which we probably can't even imagine today, it will be difficult to offer an out-of-the-box solution that covers every possible scenario. Applying custom sensors is a feasible solution that many of our customers already use. In the past we've seen users of PRTG Network Monitor at the forefront of creativity, using custom sensors to monitor prawn farms, pellet stokers or even a hospital's blood fridge: none of them network devices, but all of them "things". We're learning about new applications almost every day and are excited about this development. Based on our customers' feedback, we're constantly improving PRTG and implementing new features, so that when the time comes, our customers will be able to monitor every "thing" they want.
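
As an illustration of what such a custom sensor can look like, here is a minimal sketch of a script for PRTG's EXE/Script Advanced sensor type, which reads an XML result from the script's standard output. The element names used (`prtg`, `result`, `channel`, `value`, `float`, `unit`, `LimitMaxError`, `LimitMode`, `error`, `text`) follow PRTG's custom sensor format as I understand it and should be checked against the PRTG manual. The `read_fridge_temperature()` function and the 6.0 °C threshold are hypothetical stand-ins for real hardware and a real policy.

```python
import xml.etree.ElementTree as ET
from typing import Optional


def read_fridge_temperature() -> float:
    """Hypothetical stand-in for reading a temperature probe in the fridge."""
    return 4.2


def prtg_result(channel: str, value: float, unit: str = "Temperature",
                max_error: Optional[float] = None) -> str:
    """Build the XML document the sensor script prints to stdout."""
    root = ET.Element("prtg")
    result = ET.SubElement(root, "result")
    ET.SubElement(result, "channel").text = channel
    ET.SubElement(result, "value").text = str(value)
    ET.SubElement(result, "float").text = "1"  # the value has decimals
    ET.SubElement(result, "unit").text = unit
    if max_error is not None:
        # Illustrative upper limit: the channel goes to an error state above it.
        ET.SubElement(result, "LimitMaxError").text = str(max_error)
        ET.SubElement(result, "LimitMode").text = "1"  # enable the limit
    return ET.tostring(root, encoding="unicode")


def prtg_error(message: str) -> str:
    """Report that the sensor itself failed, e.g. the probe is unreachable."""
    root = ET.Element("prtg")
    ET.SubElement(root, "error").text = "1"
    ET.SubElement(root, "text").text = message
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    try:
        print(prtg_result("Blood fridge", read_fridge_temperature(), max_error=6.0))
    except OSError as exc:
        print(prtg_error(f"Cannot read fridge sensor: {exc}"))
```

Reporting an explicit error result, rather than simply printing nothing, lets the monitoring system tell "the fridge is too warm" apart from "we can no longer see the fridge".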

If you can think of an exciting thing to monitor, or even have written a custom sensor yourself, let us know: mot@paessler.com

Friday, May 16, 2014

ISACA introduces Cybersecurity Nexus

Network World - Every organization that has recently tried to recruit and hire qualified information security professionals knows it’s a tough environment for hiring. The demand for cybersecurity professionals has grown more than 3.5 times faster than the demand for other IT jobs over the past five years and more than 12 times faster than the demand for all other non-IT jobs, according to a recent report from Burning Glass Technologies. Current staffing shortages are estimated between 20,000 and 40,000 and are expected to continue for years to come.

This week ISACA (www.ISACA.org) is launching the Cybersecurity Nexus (CSX) program to address this shortage and to help IT professionals with security-related responsibilities to “skill up.”

For more than 40 years, ISACA has been a pace-setting global organization for information governance, control, security and audit professionals. The membership organization is well known for its COBIT business framework for the governance and management of enterprise IT. This framework is based on the latest thinking in enterprise governance and management techniques, and provides globally accepted principles, practices, analytical tools and models to help increase the trust in, and value from, information systems.

Now ISACA is building on this foundation with the Cybersecurity Nexus program to help bridge the skills gap between IT governance and information security. ISACA is seeing rapid growth among its 115,000 members in assuming security practitioner responsibilities. However, a recent member survey indicated that many of these people don’t feel they have the necessary knowledge and skills to prevent advanced persistent threats (APTs) from causing harm to their organizations.

CSX is designed as a comprehensive program that provides expert-level cybersecurity resources tailored to each stage in a cybersecurity professional’s career. Today the program includes career development resources, frameworks, community and research guidance such as Responding to Targeted Cyberattacks and Transforming Cybersecurity Using COBIT 5.

There is also a Cybersecurity Fundamentals Certificate aimed at entry-level information security professionals with 0 to 3 years of practitioner experience. The certificate is for people just coming out of college and for career-changers now getting into IT security. The foundational, knowledge-based exam covers four domains:

1. Cybersecurity architecture principles

2. Security of networks, systems, applications and data

3. Incident response

4. Security implications related to adoption of emerging technologies

The exam will be offered online and at select ISACA conferences and training events beginning this September. The content aligns with the US NICE framework and was developed by a team of about 20 cybersecurity professionals from around the world.

As time goes by, ISACA will be adding more to the CSX program, including:

• Mentoring Program

• Implementation guidance for NIST’s US Cybersecurity Framework (which incorporates COBIT 5) and the EU Cybersecurity Strategy

• Cybersecurity practitioner-level certification (first exam: 2015)

• Cybersecurity Training courses

• SCADA guidance

• Digital forensics guidance

The membership and strong community aspect of ISACA is what differentiates this program from other training and certifications offered by groups like Cyber Aces, SANS Institute and ISC2. What’s more, those programs tend to focus on the true infosec domain, whereas ISACA’s initiative is coming at cybersecurity from the business risk element and the risk proposition of cyber computing. CSX is a single “place” for people to go to and get what they need to advance not only their organization’s cybersecurity posture but also their own careers.

“We’re trying to tie the business ecosystem together, down from the corporate strategy, to the business architecture and execution, and ultimately to the cyber risk and putting in the appropriate preventative controls,” says incoming ISACA president Rob Stroud. “But more importantly with cyber is how you deal with an attack after it happens because you can’t prevent everything. This is where we see our place in this domain and it is certainly our starting position.”

According to Stroud, part of where ISACA is going with this program is with practical publications that give guidance on putting appropriate controls in place. “What we are doing now in this changing, disruptive world is developing practical guidance and making it available in a form that the practitioner can actually interact with it based on roles and responsibilities. This will be coming down the road a little way,” says Stroud.

For more information about ISACA’s Cybersecurity Nexus program, visit www.isaca.org/cyber.

Linda Musthaler (LMusthaler@essential-iws.com) is a Principal Analyst with Essential Solutions Corp. which researches the practical value of information technology and how it can make individual workers and entire organizations more productive. Essential Solutions offers consulting services to computer industry and corporate clients to help define and fulfill the potential of IT.

Identity and access management for 10 million users?

Identity and access management for 10 million users? No sweat!

IT Best Practices Alert By Linda Musthaler, Network World

May 16, 2014 10:54 AM ET

Network World - What do you do when you are asked to build an identity and access management (IAM) system that can handle up to 10 million individual identities? You build it in the cloud, of course, where the dynamic elasticity can support the rigorous demands of the system.

That’s precisely what the North Carolina Department of Public Instruction (NCDPI) had in mind when it put out a request for proposal (RFP) seeking a vendor that could build and maintain a cloud-based IAM system that would accommodate every student, teacher, staff member and parent/guardian within the state’s more than 250 school districts and charter schools.

This IAM system is a critical piece of a state-wide initiative called NCEdCloud. The primary objective of NCEdCloud is to provide a world-class IT infrastructure as a foundational component of the North Carolina educational enterprise. Rather than leaving the job of building and maintaining a separate IT infrastructure to each individual school district, the state is using a Race to the Top federal grant to build one massive infrastructure to serve all. When fully built out, NCEdCloud will provide for:

- Equity of access to servers and storage resources.

- Efficient scaling according to aggregate North Carolina K-12 usage requirements.

- Consistently high availability, reliability and performance.

- A common infrastructure platform to support emerging instructional and data systems.

- A sustainable and predictable operational model.

NCDPI awarded the contract to build and maintain NCEdCloud-IAM to Identity Automation of Houston. In large part, the decision was based on the vendor’s ability to prove its capabilities in a working proof of concept.

“The PoC was a real test for the prospective vendors,” according to systems architect Sammie Carter, the state’s Service Manager for the NCEdCloud project. “Our project is quite sophisticated, so we wanted real proof that the vendor we selected could handle the work. We gave the bidding vendors millions of records of simulated data that matched the challenges of our real source data. Then we gave them changes to that data and asked them to run it through their system to see how it works. Basically we ran the prospective vendors through the wringer and had very specific requirements to show us that they truly understood our problem.”

Prior to this project, Identity Automation had mostly provided on-premise IAM systems for K-12 schools. Identity Automation has its own set of tools for identity federation and single sign-on, provisioning and lifecycle management, and self-service and delegation. For the NCEdCloud project, Identity Automation had to port all of its tools and applications to run natively on Amazon Linux so that the NCEdCloud-IAM system could run on Amazon Web Services (AWS). Once that was done, the vendor was able to build a system that can support up to 10 million identities at once.

Identity Automation set up three components of its application, each in its own subnet on Amazon EC2. Here’s a quick look at each of these components and what they do.

Lifecycle provisioning

This part of the IAM solution is driven by Identity Automation’s Data Synchronization System (DSS). This application takes data from multiple source databases and brings all these identities together in one database to provision and maintain accounts in Active Directory and in key applications required by the state, including Google Apps for Education, Discovery Education, Follett Destiny, Zscaler and Central Directory Local Replica.

The source data – the information about students, teachers, and other users of NCEdCloud – comes from the 250+ school districts across the state. There are hundreds of files in a wide range of formats. Identity Automation consolidates all of this data, normalizes it and puts it into a “person registry” database where it is cleansed, de-duplicated, checked for missing required values, and so on. The “clean” data is then put into a central Active Directory instance on EC2 where it is the authoritative source used to drive the provisioning of accounts in applications and services and to authenticate people as they login. Successfully getting to this point, especially with the enormous volume of data, has been a huge achievement.
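
DSS itself is proprietary, so the sketch below only illustrates the general consolidation step described above: normalize records arriving in different formats, set aside records missing required values, and de-duplicate by a stable person ID into a single registry. The field names (`state_id`, `first_name`, `last_name`, `role`, `school`), the two sample formats and the "later source wins" rule are all assumptions made for the example.

```python
import csv
import io
import json
from dataclasses import dataclass

# Hypothetical required fields for every person record.
REQUIRED = ("state_id", "first_name", "last_name", "role", "school")


@dataclass(frozen=True)
class Person:
    state_id: str
    first_name: str
    last_name: str
    role: str
    school: str


def normalize(raw: dict) -> dict:
    """Trim whitespace, lower-case keys and roles, and title-case names."""
    rec = {str(k).strip().lower(): ("" if v is None else str(v).strip())
           for k, v in raw.items()}
    for key in ("first_name", "last_name"):
        if key in rec:
            rec[key] = rec[key].title()
    if "role" in rec:
        rec["role"] = rec["role"].lower()
    return rec


def load_sources(csv_text: str, json_text: str) -> list[dict]:
    """Two example source formats: a district CSV export and a JSON export."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    rows += json.loads(json_text)
    return [normalize(r) for r in rows]


def build_registry(records: list[dict]) -> tuple[dict[str, Person], list[dict]]:
    """Return the cleansed registry keyed by state_id, plus rejected records."""
    registry: dict[str, Person] = {}
    rejected: list[dict] = []
    for rec in records:
        if any(not rec.get(field) for field in REQUIRED):
            rejected.append(rec)  # missing a required value: hold for review
            continue
        # De-duplicate: a later source overwrites an earlier one for the same ID.
        registry[rec["state_id"]] = Person(*(rec[f] for f in REQUIRED))
    return registry, rejected
```

Only the cleansed registry would then be pushed into Active Directory; rejected records go back to the source district for correction instead of creating broken accounts.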

Identity Automation has scheduled jobs that regularly check the source files for changes that need to cascade into Active Directory and the various educational applications. For example, if a student changes schools, his identity needs to be updated to reflect his new school, and he must be provisioned for the right resources and applications according to his new profile.
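
One simple way such a scheduled job can find the changes that must cascade downstream is to compare the previous registry snapshot with the current one. This is a hedged sketch, not Identity Automation's method: the action names and the choice to disable rather than delete departed users are assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    kind: str        # "create", "update" or "disable" (hypothetical action names)
    person_id: str
    detail: str = ""


def diff_snapshots(old: dict[str, dict], new: dict[str, dict]) -> list[Action]:
    """Compare the previous and current registry snapshots (keyed by person ID)
    and return the provisioning actions needed in downstream systems."""
    actions: list[Action] = []
    for pid in sorted(new.keys() - old.keys()):
        actions.append(Action("create", pid))      # new person: provision accounts
    for pid in sorted(old.keys() - new.keys()):
        actions.append(Action("disable", pid))     # gone from sources: disable, don't delete
    for pid in sorted(old.keys() & new.keys()):
        changed = sorted(k for k in old[pid].keys() | new[pid].keys()
                         if old[pid].get(k) != new[pid].get(k))
        if changed:
            actions.append(Action("update", pid, ",".join(changed)))
    return actions
```

In the article's example, a student who changes schools shows up as an `update` on the `school` attribute, which the provisioning side would translate into new group memberships and application access for the new school.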

Single sign-on

The state has a list of various cloud-based applications it wants to make available to every school district. To simplify access to these and other locally chosen applications, Identity Automation was charged with setting up single sign-on to the apps. The vendor uses its Federated Identity Management System (FIMS) to enable single sign-on. If an end user first goes to an application, say Google Apps for Education, he is redirected to a branded login page known to users as My.NCEdCloud, where he enters his credentials. Identity Automation authenticates the user with a SAML identity provider via FIMS. From there he is redirected back into Google Apps.

Once the user is authenticated, if he doesn’t close his browser, he can go right into other applications without having to login again. FIMS takes care of all the authentication work behind the scenes, making the user’s process to access applications much more streamlined.
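
FIMS is proprietary and the article does not describe its internals, but the redirect it describes (user visits Google Apps, is sent to the My.NCEdCloud login, then returns) matches the standard service-provider-initiated SAML 2.0 flow. The sketch below shows the first leg using the SAML HTTP-Redirect binding, in which the service provider builds an `AuthnRequest`, compresses it with raw DEFLATE, base64-encodes it and passes it in the `SAMLRequest` query parameter. All URLs and entity IDs are hypothetical, and a production service provider would also sign the request and validate the identity provider's signed response, neither of which is shown.

```python
import base64
import datetime
import urllib.parse
import uuid
import zlib

IDP_SSO_URL = "https://idp.example.org/saml/sso"   # hypothetical identity provider endpoint
SP_ENTITY_ID = "https://apps.example.org/sp"       # hypothetical service provider ID
SP_ACS_URL = "https://apps.example.org/saml/acs"   # where the IdP posts its response


def build_authn_request() -> str:
    """Minimal, unsigned SAML 2.0 AuthnRequest."""
    issue_instant = datetime.datetime.now(datetime.timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")
    request_id = "_" + uuid.uuid4().hex  # SAML IDs must not start with a digit
    return (
        '<samlp:AuthnRequest xmlns:samlp="urn:oasis:names:tc:SAML:2.0:protocol" '
        'xmlns:saml="urn:oasis:names:tc:SAML:2.0:assertion" '
        f'ID="{request_id}" Version="2.0" IssueInstant="{issue_instant}" '
        f'Destination="{IDP_SSO_URL}" AssertionConsumerServiceURL="{SP_ACS_URL}" '
        'ProtocolBinding="urn:oasis:names:tc:SAML:2.0:bindings:HTTP-POST">'
        f"<saml:Issuer>{SP_ENTITY_ID}</saml:Issuer>"
        "</samlp:AuthnRequest>"
    )


def redirect_url(authn_request_xml: str, relay_state: str) -> str:
    """HTTP-Redirect binding: raw DEFLATE, then base64, then URL-encode."""
    # wbits=-15 produces raw DEFLATE data with no zlib header, as the binding requires.
    compressor = zlib.compressobj(zlib.Z_DEFAULT_COMPRESSION, zlib.DEFLATED, -15)
    deflated = compressor.compress(authn_request_xml.encode("utf-8")) + compressor.flush()
    saml_request = base64.b64encode(deflated).decode("ascii")
    query = urllib.parse.urlencode({"SAMLRequest": saml_request, "RelayState": relay_state})
    return f"{IDP_SSO_URL}?{query}"
```

`RelayState` carries the page the user originally asked for, which is how the identity provider's response can land the user back in the application after login.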

Self-service and delegation

People forget passwords all the time, and it’s time consuming to ask a help desk to reset them. So, Identity Automation built in self-service capabilities so that students and other users can reset their own passwords if necessary. Additionally, teachers and local administrators can be delegated the capability to reset passwords and perform other minor maintenance duties on behalf of their students. Identity Automation uses its Access Request Management Systems (ARMS) to enable self-service and delegation.
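
The article does not describe how ARMS models delegated rights, so here is a hypothetical policy check showing one way to scope delegation: anyone may reset their own password, while teachers and local administrators may act only within their own school, and teachers only on students. The roles and rules are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class User:
    user_id: str
    role: str      # hypothetical roles: "student", "teacher" or "local_admin"
    school: str


def may_reset_password(actor: User, target: User) -> bool:
    """Hypothetical delegation policy for password resets."""
    if actor.user_id == target.user_id:
        return True                       # self-service
    if actor.school != target.school:
        return False                      # delegation is scoped to one school
    if actor.role == "local_admin":
        return True                       # local admins cover everyone at their school
    if actor.role == "teacher":
        return target.role == "student"   # teachers may act for their students only
    return False
```

Keeping the rule this narrow means a compromised teacher account cannot be used to reset administrator or other schools' passwords.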

The Importance of Building IAM in the Cloud

The elasticity of the cloud is a crucial factor in being able to build and operate NCEdCloud-IAM. Consider what happens each weekday morning when students and teachers across the entire state of North Carolina log in to access their educational applications for the day, all at approximately the same time. Everyone needs to authenticate using the same service, and it’s important that performance not be an issue.

Identity Automation takes advantage of Amazon’s Elastic Load Balancer service, which distributes transactions across three availability zones on the East Coast. For each of the three component services I discussed above, Identity Automation has a minimum of three instances running at any given time. When utilization reaches a specified threshold, three more instances are automatically spun up in a matter of minutes to maintain desired performance levels. The service can keep adding instances as demand peaks, and then just as quickly drop the instances when demand tapers off—all automatically, and all within minutes.
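
The scaling behaviour described above (a floor of three instances, three more added when utilization crosses a threshold, instances removed as demand tapers off) is a step-scaling rule. On AWS this is normally configured declaratively with Auto Scaling groups and CloudWatch alarms rather than written as code; the function below only illustrates the decision logic, and the 70%/30% thresholds and the ceiling of 30 instances are hypothetical.

```python
def desired_instances(current: int, utilization: float,
                      scale_out_at: float = 0.70, scale_in_at: float = 0.30,
                      step: int = 3, minimum: int = 3, maximum: int = 30) -> int:
    """Step-scaling rule: add `step` instances above the high-water mark,
    remove `step` below the low-water mark, and stay within [minimum, maximum]."""
    if utilization >= scale_out_at:
        return min(current + step, maximum)
    if utilization <= scale_in_at:
        return max(current - step, minimum)
    return current  # between the marks: leave capacity unchanged
```

Using separate high- and low-water marks keeps the group from flapping up and down when utilization hovers around a single threshold during the morning login rush.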

Identity Automation also uses the Amazon Relational Database Service (RDS). This web service makes it easy to set up, operate and scale a relational database in the cloud. Everything is automatically clustered and highly available with automatic failover. This makes something that is usually very complicated and difficult to support very simple for Identity Automation.

NCEdCloud-IAM isn’t yet supporting the full 10 million users it expects to reach in a few years, but with the system built on the Amazon EC2 platform, there’s no concern about scalability, performance and availability.

Learn more about NCEdCloud and about the NCEdCloud IAM project.
